Modern Optimization Theory and Applications in Optimal Control

2024 Dean Undergraduate Research Project


Overview

We begin the project by studying fundamental optimization tools, including first- and second-variation methods in the single-variable and multivariable settings. We then move on to Optimal Control Theory, focusing on Controllability, the Bang-Bang Principle, Linear Time-Optimal Control, the Pontryagin Maximum Principle, and Dynamic Programming. We adapt real-life examples and develop numerical solutions and visualizations for them. Our final investigation extends from Optimal Control Theory to its use in diverse fields such as Game Theory, Stochastic Calculus, and Partial Differential Equations. To read our final academic report, contact me at kl4747@nyu.edu for authorization. If you have any questions or ideas, or would like to collaborate with us, feel free to email me.

Theory

Basic Problem

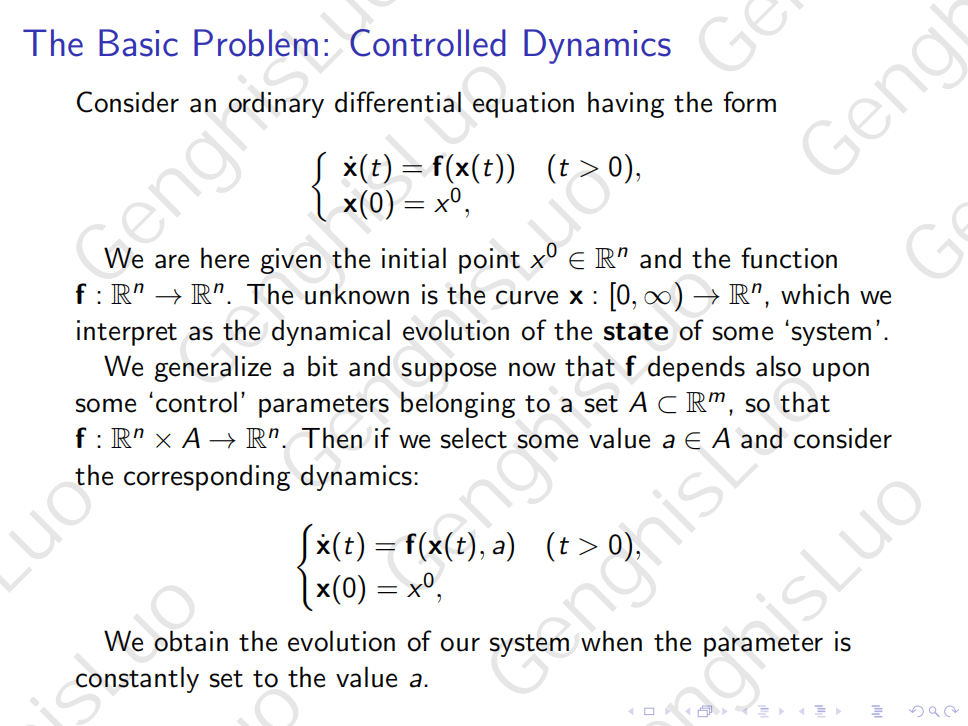

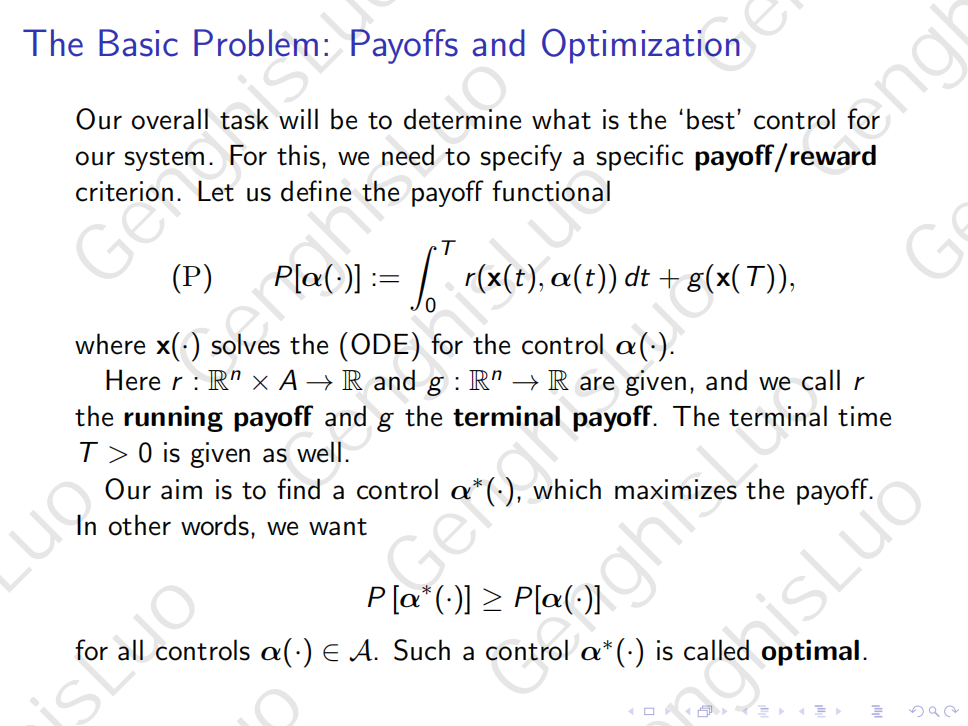

The basic optimal control problem can be described as a variation of an ODE optimization problem in which a new control function is added to the system.

The overall task is to determine the optimal control, that is, the control that minimizes the payoff functional we have chosen. Note that maximizing and minimizing are essentially equivalent, since each can be converted into the other by negating the payoff functional.

Controllability

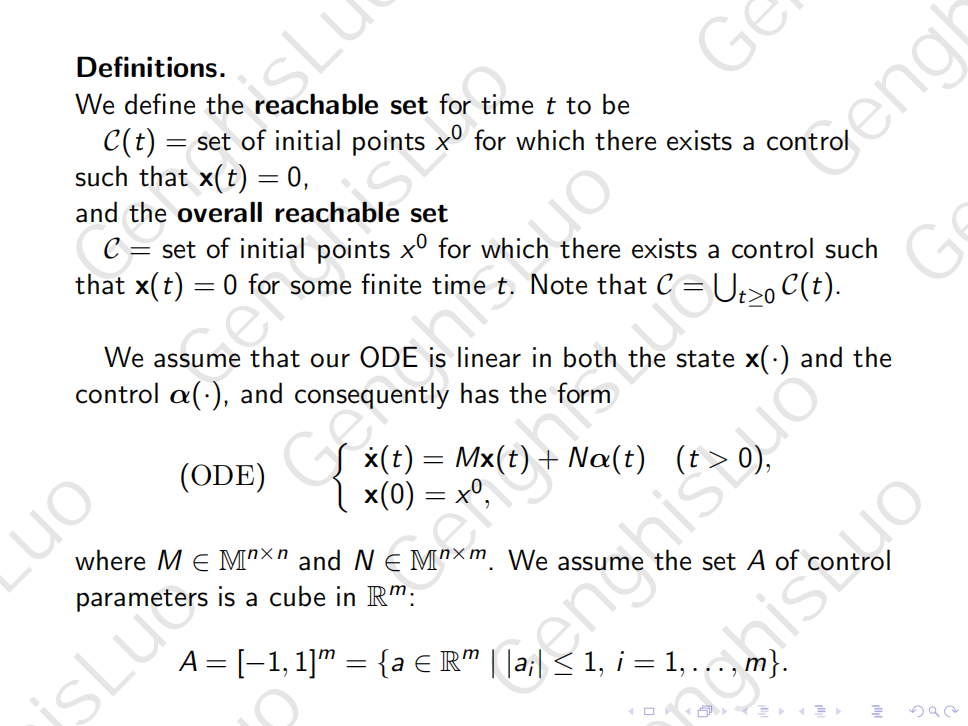

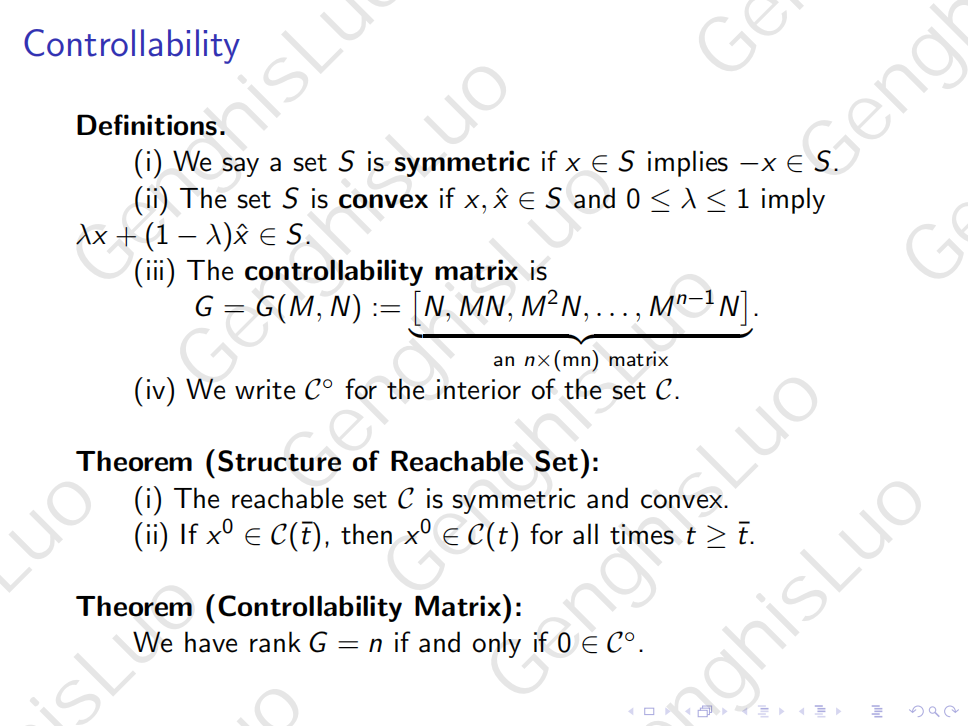

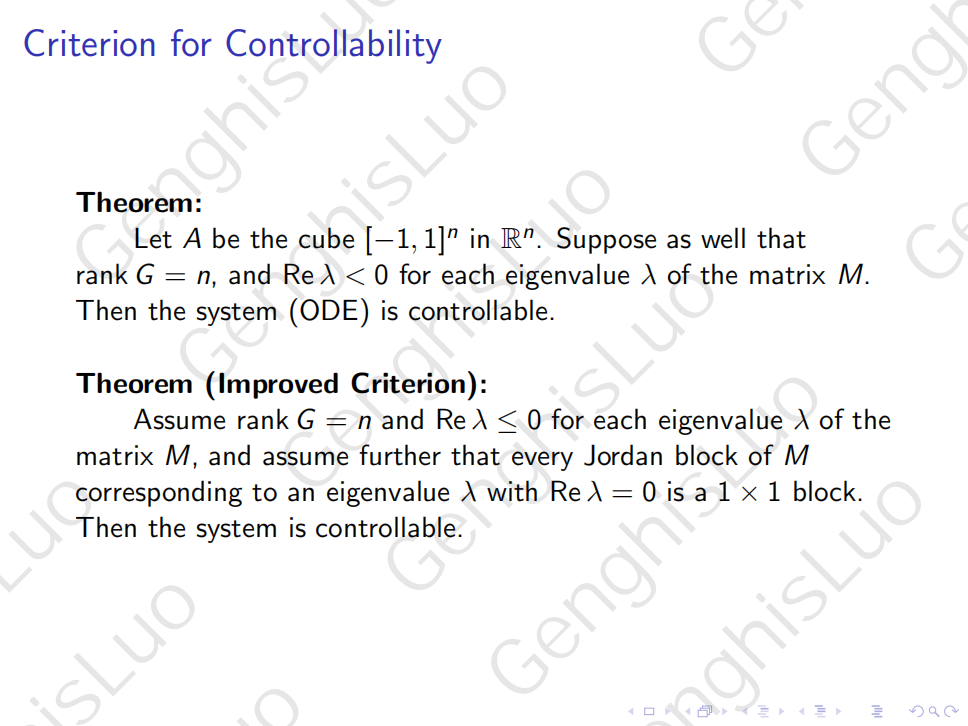

Many problems, both in practice and among those we consider, impose constraints on the initial point and on the target set. It is therefore natural to ask whether there exists any control that steers the system from the initial point to the target, in other words, whether the problem is controllable.

We then give several definitions and state two controllability criteria.

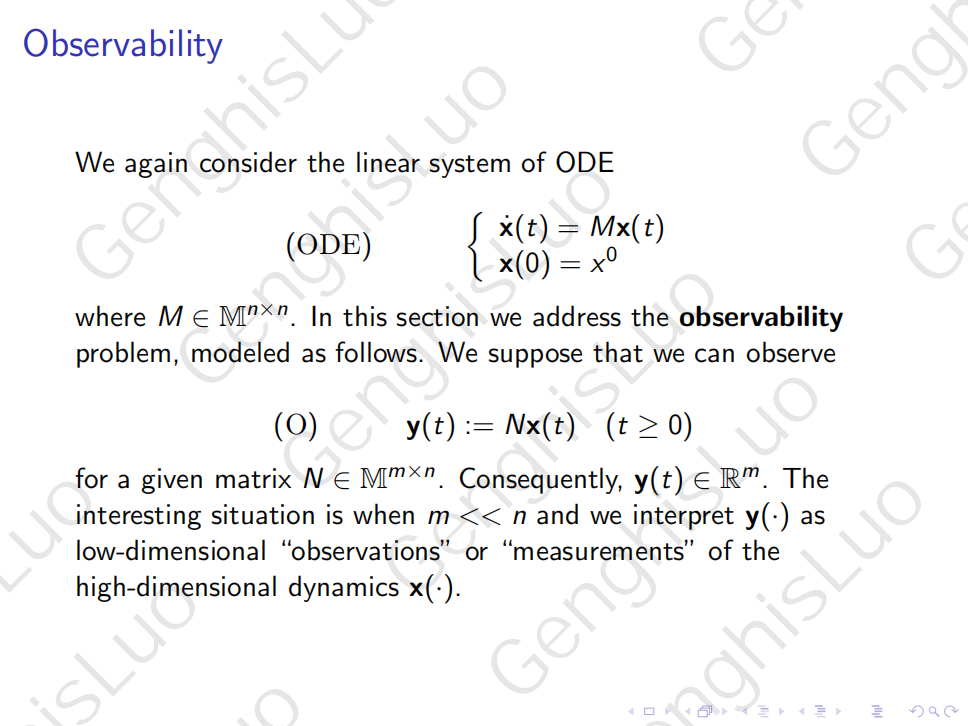

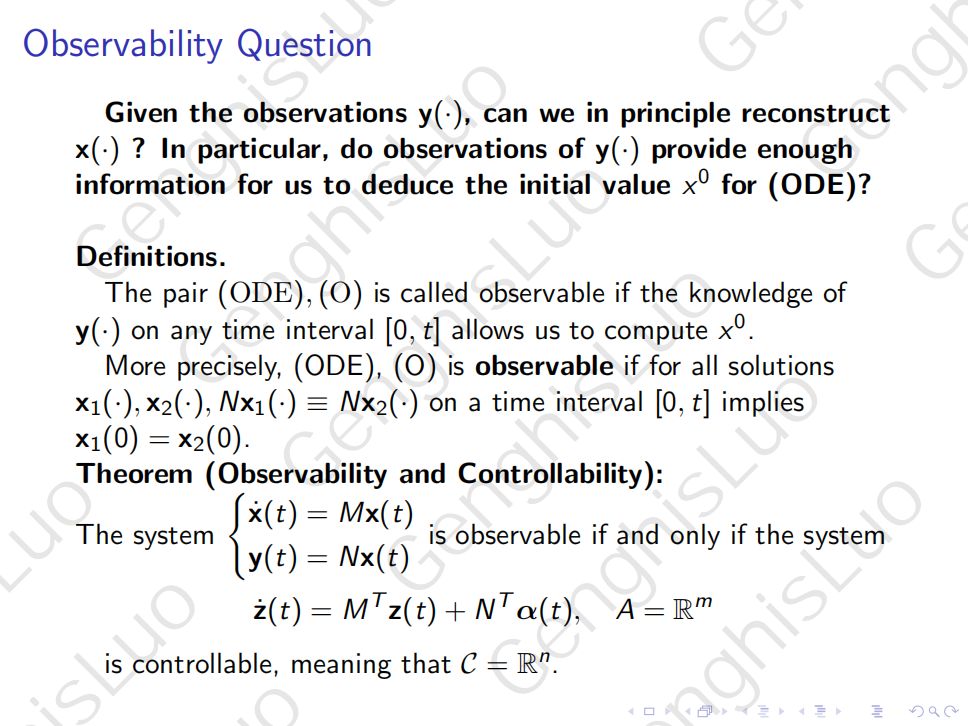
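One standard criterion of this kind is the Kalman rank condition. The sketch below assumes a linear system x' = Mx + Nα and checks whether the matrix G = [N, MN, ..., M^(n-1)N] has full rank n. The names `M`, `N`, and all function names are illustrative assumptions rather than notation taken from the report, and the rank test alone does not account for bounds on the control.

```python
from fractions import Fraction


def mat_mul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def rank(M):
    """Rank via Gaussian elimination over exact fractions (no rounding issues)."""
    rows = [[Fraction(x) for x in row] for row in M]
    n_cols = len(rows[0]) if rows else 0
    r = 0
    for c in range(n_cols):
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, len(rows)):
            factor = rows[i][c] / rows[r][c]
            rows[i] = [x - factor * y for x, y in zip(rows[i], rows[r])]
        r += 1
    return r


def controllability_matrix(M, N):
    """Build G = [N, MN, ..., M^(n-1) N] as a single n x (n*m) matrix."""
    n = len(M)
    block, blocks = N, []
    for _ in range(n):
        blocks.append(block)
        block = mat_mul(M, block)
    # Concatenate the blocks side by side, row by row.
    return [sum((b[i] for b in blocks), []) for i in range(n)]


def is_controllable(M, N):
    """Kalman rank test: rank G == n (assumes the linear system x' = Mx + N*alpha)."""
    return rank(controllability_matrix(M, N)) == len(M)


# Illustrative example: a double integrator driven through its second component.
double_integrator = [[0, 1], [0, 0]]
force_input = [[0], [1]]
print(is_controllable(double_integrator, force_input))
```

Exact fractions are used so that the rank decision does not depend on a floating-point tolerance; for large systems a numerical linear algebra library would be the more practical choice.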

Observability

In some situations we can observe only a transformation of x, so another natural question is whether the observations we have can, in principle, reconstruct the response; in particular, whether they allow us to deduce the initial value of the uncontrolled system. This property is called observability. We give several definitions and a criterion for observability, which turns out to be closely connected to controllability.

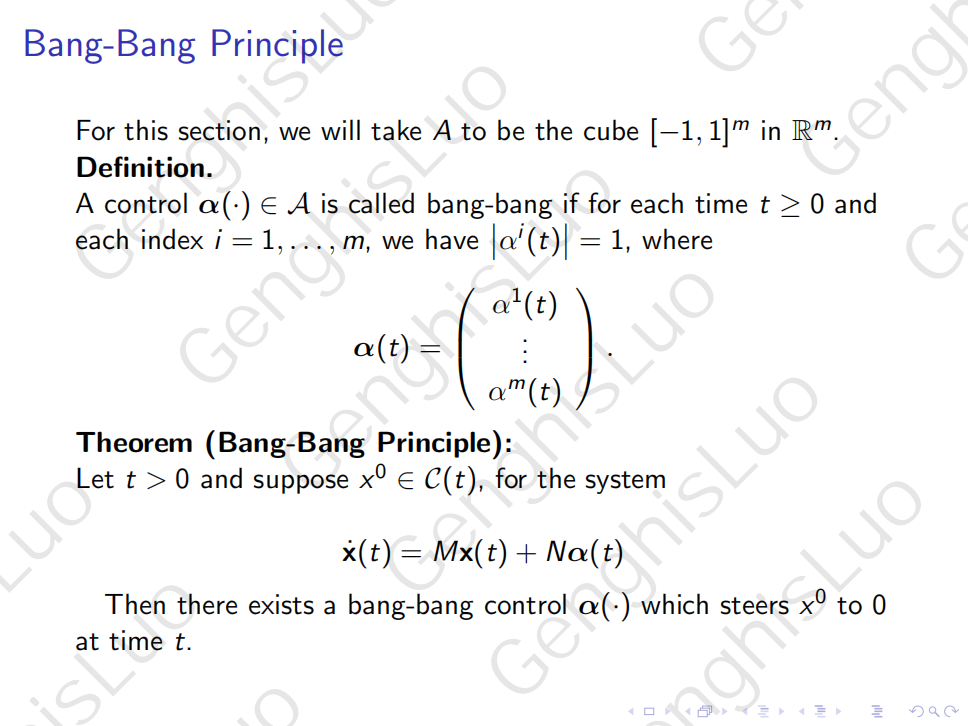
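The connection to controllability can be made concrete with a small sketch. It assumes the uncontrolled linear system x' = Mx with observation y = Nx, and uses the standard rank test on the stacked matrix [N; NM; ...; NM^(n-1)]. It also checks the usual duality: this matrix is the transpose of the controllability matrix of the pair (M^T, N^T). All names here are illustrative assumptions, not notation from the report.

```python
from fractions import Fraction


def mat_mul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def rank(M):
    """Rank via Gaussian elimination over exact fractions."""
    rows = [[Fraction(x) for x in row] for row in M]
    n_cols = len(rows[0]) if rows else 0
    r = 0
    for c in range(n_cols):
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, len(rows)):
            factor = rows[i][c] / rows[r][c]
            rows[i] = [x - factor * y for x, y in zip(rows[i], rows[r])]
        r += 1
    return r


def observability_matrix(M, N):
    """Stack N, NM, ..., N M^(n-1) vertically."""
    block, rows = N, []
    for _ in range(len(M)):
        rows.extend(block)
        block = mat_mul(block, M)
    return rows


def controllability_matrix(M, N):
    """Build [N, MN, ..., M^(n-1) N] side by side."""
    n = len(M)
    block, blocks = N, []
    for _ in range(n):
        blocks.append(block)
        block = mat_mul(M, block)
    return [sum((b[i] for b in blocks), []) for i in range(n)]


def is_observable(M, N):
    """Rank test for x' = Mx, y = Nx (an assumed linear setting)."""
    return rank(observability_matrix(M, N)) == len(M)


# Illustrative example: x1' = x2, x2' = 0 (position and constant velocity).
M = [[0, 1], [0, 0]]
observe_position = [[1, 0]]
observe_velocity = [[0, 1]]
print(is_observable(M, observe_position), is_observable(M, observe_velocity))
```

In this toy system, watching the position over time reveals the velocity, while watching only the velocity can never reveal where the system started.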

Bang-Bang Principle

There is an interesting theorem about controls for linear time-optimal control problems, called the Bang-Bang Principle.

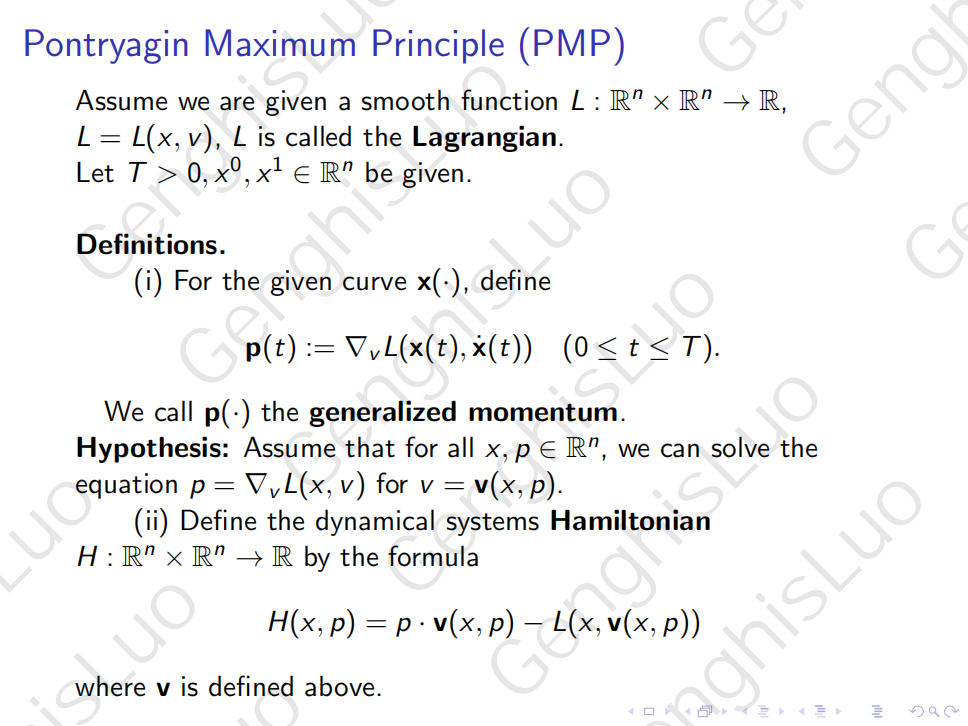

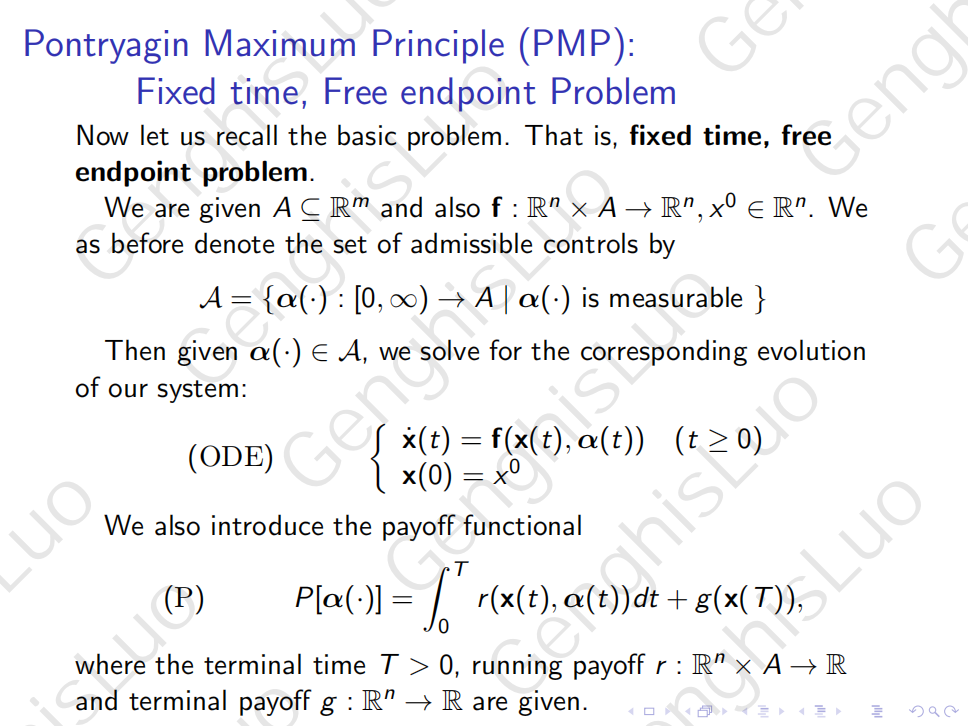

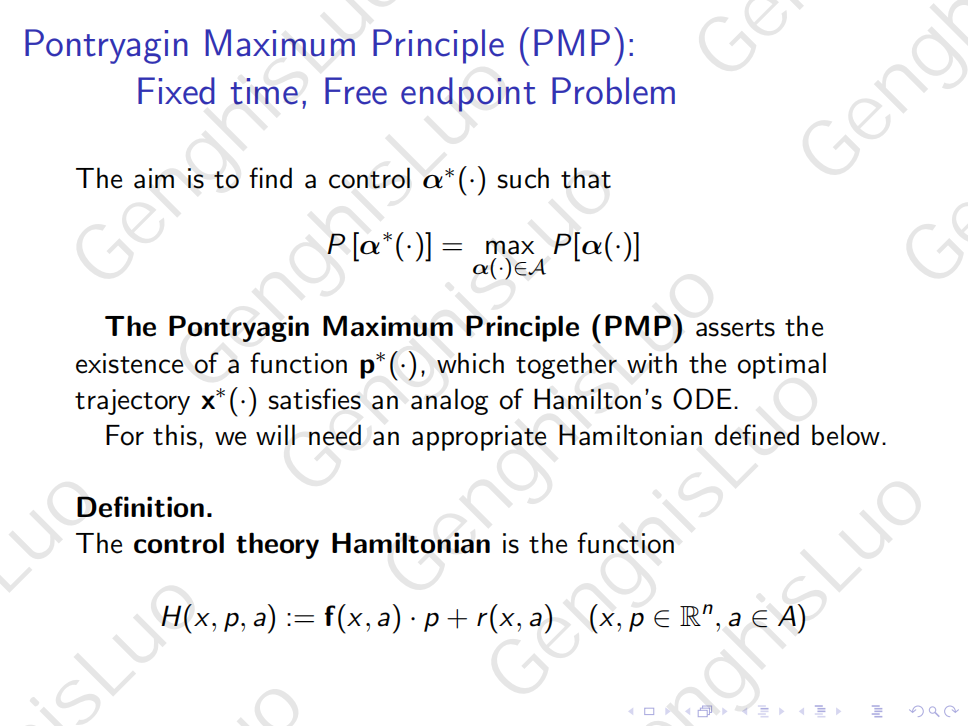

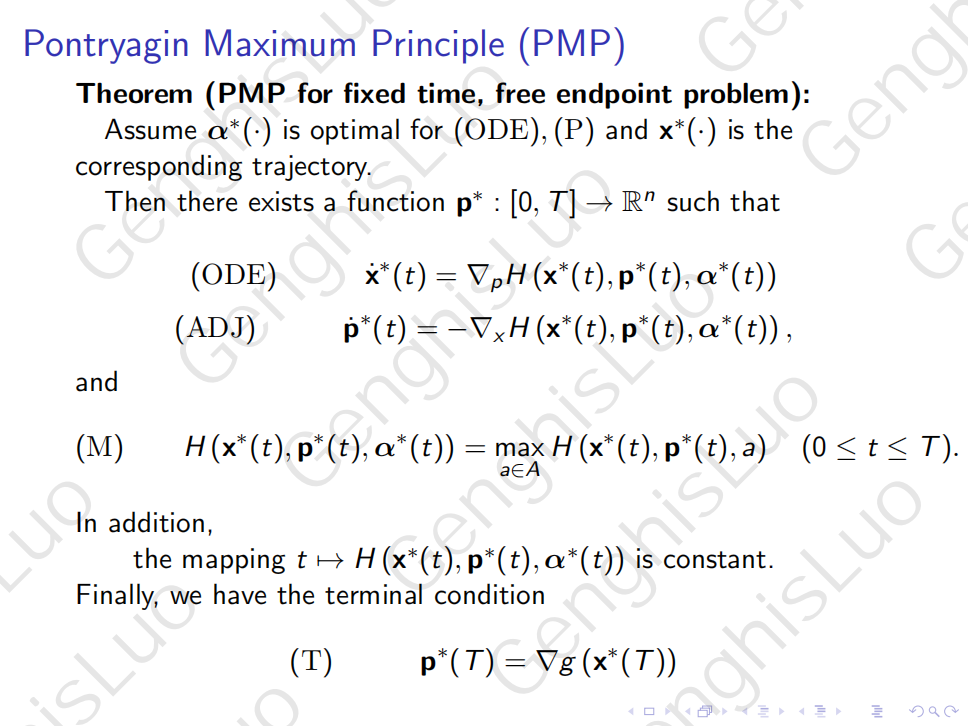

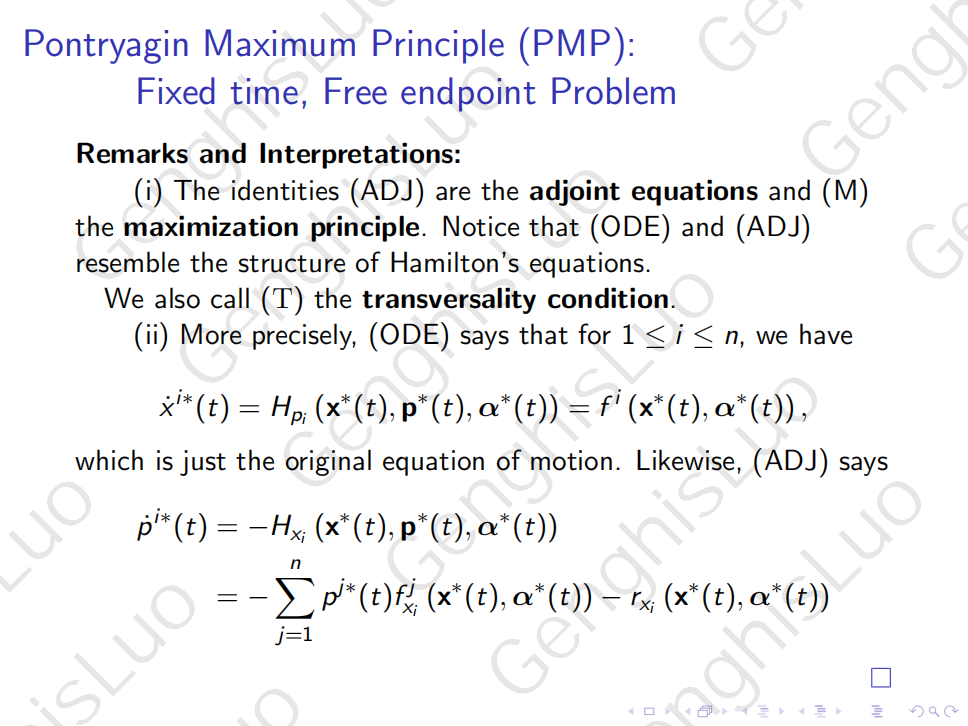

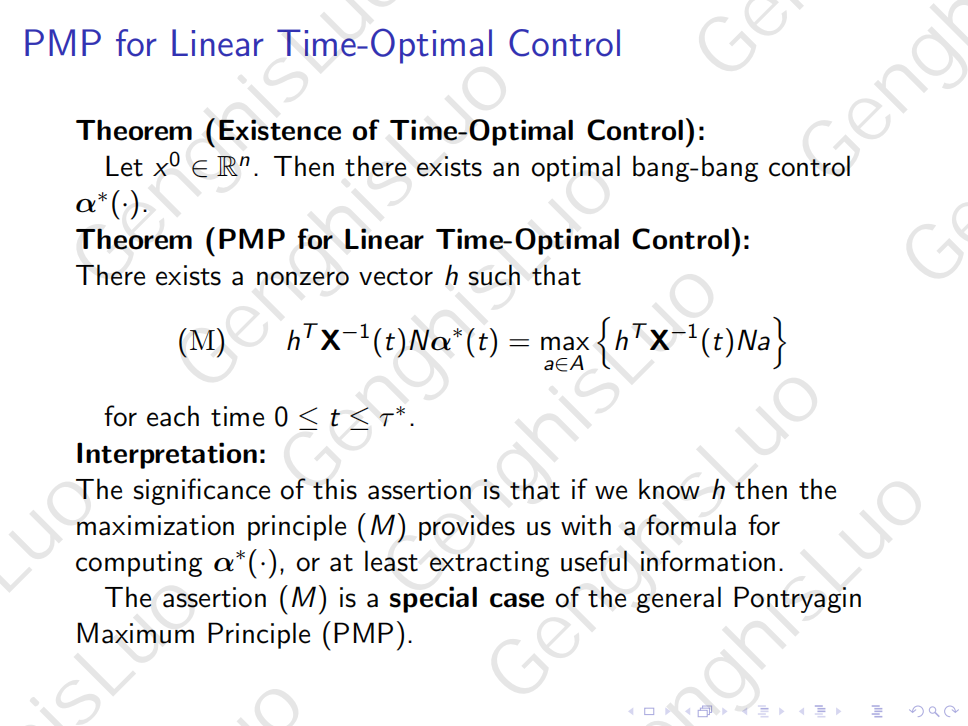
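To illustrate what a bang-bang control looks like, the sketch below simulates a textbook example that we assume here for illustration: the double integrator x'' = α with |α| ≤ 1, steered to rest at the origin. It uses the well-known switching-curve feedback α = -sgn(x + v|v|/2) and a plain Euler step. The function names, step size, and stopping tolerance are our own illustrative choices.

```python
import math


def switching_function(x, v):
    """s(x, v) = x + v|v|/2, which vanishes exactly on the switching curve."""
    return x + 0.5 * v * abs(v)


def bang_bang_feedback(x, v):
    """Feedback for x'' = alpha, |alpha| <= 1; always returns -1.0 or +1.0."""
    s = switching_function(x, v)
    if s > 0:
        return -1.0
    if s < 0:
        return 1.0
    # Exactly on the switching curve: brake toward the origin.
    return -1.0 if v > 0 else 1.0


def simulate(x0, v0, dt=1e-3, tol=1e-2, max_steps=100_000):
    """Euler simulation until the state is within `tol` of the origin."""
    x, v, t = x0, v0, 0.0
    controls = []
    while math.hypot(x, v) >= tol and len(controls) < max_steps:
        a = bang_bang_feedback(x, v)
        controls.append(a)
        x, v = x + dt * v, v + dt * a  # explicit Euler step
        t += dt
    return t, (x, v), controls


def count_switches(controls):
    """Number of times the control jumps between -1 and +1."""
    return sum(1 for a, b in zip(controls, controls[1:]) if a != b)


# Start at rest, one unit away from the origin.
elapsed, final_state, controls = simulate(1.0, 0.0)
print(elapsed, final_state, count_switches(controls))
```

Starting from rest at distance x0 > 0, the exact minimum time for this model is 2·sqrt(x0), reached by full thrust in one direction followed by full thrust in the other. The simulation should come close to that value, up to the discretization and the stopping tolerance.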

Pontryagin Maximum Principle

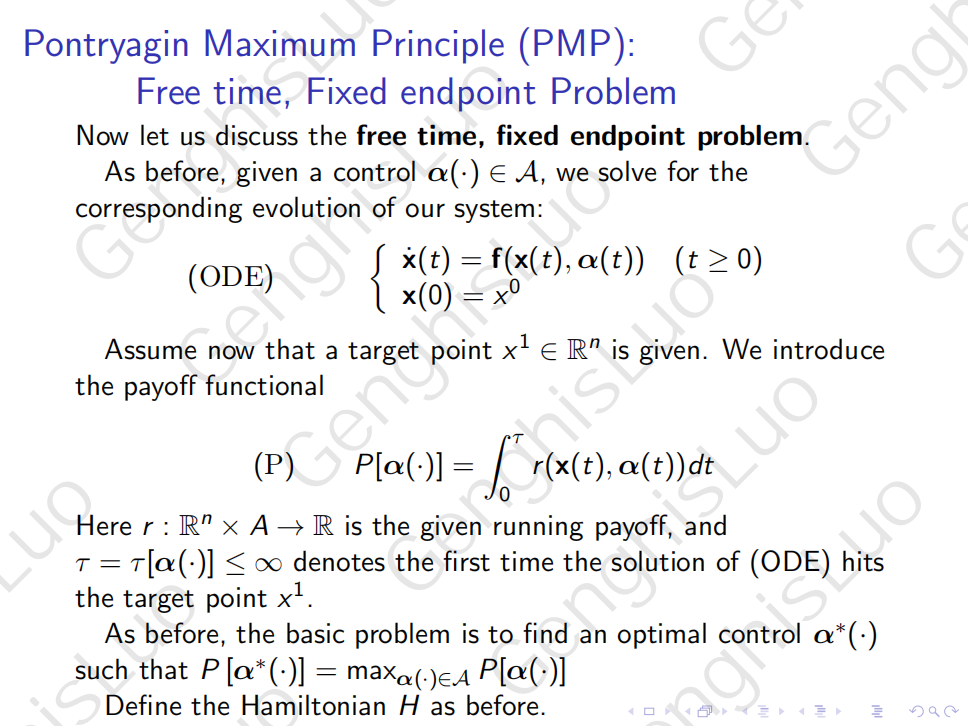

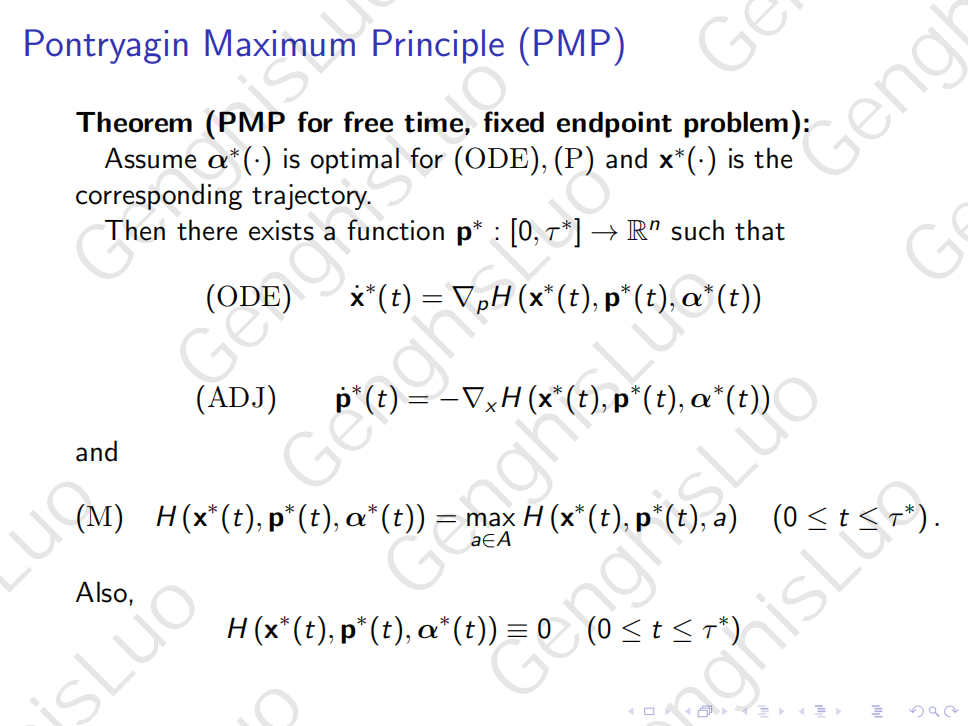

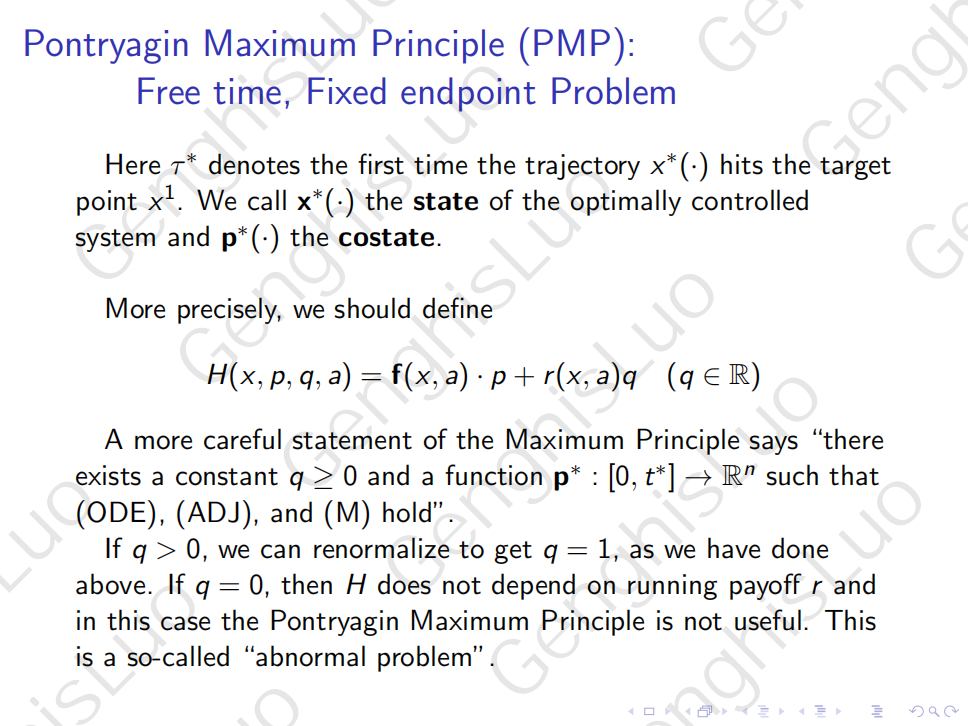

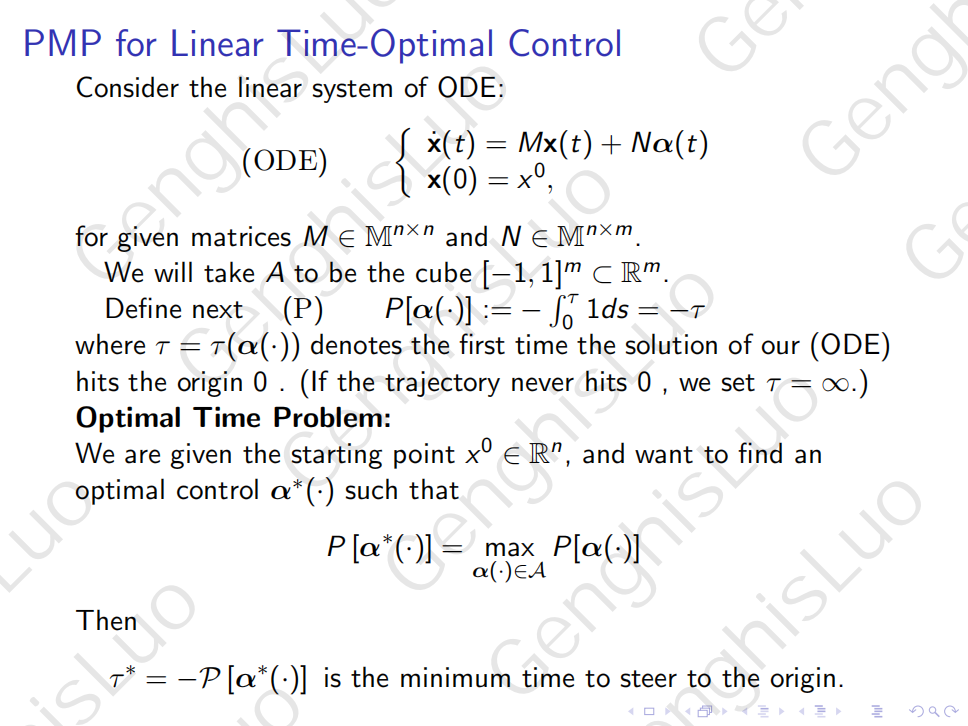

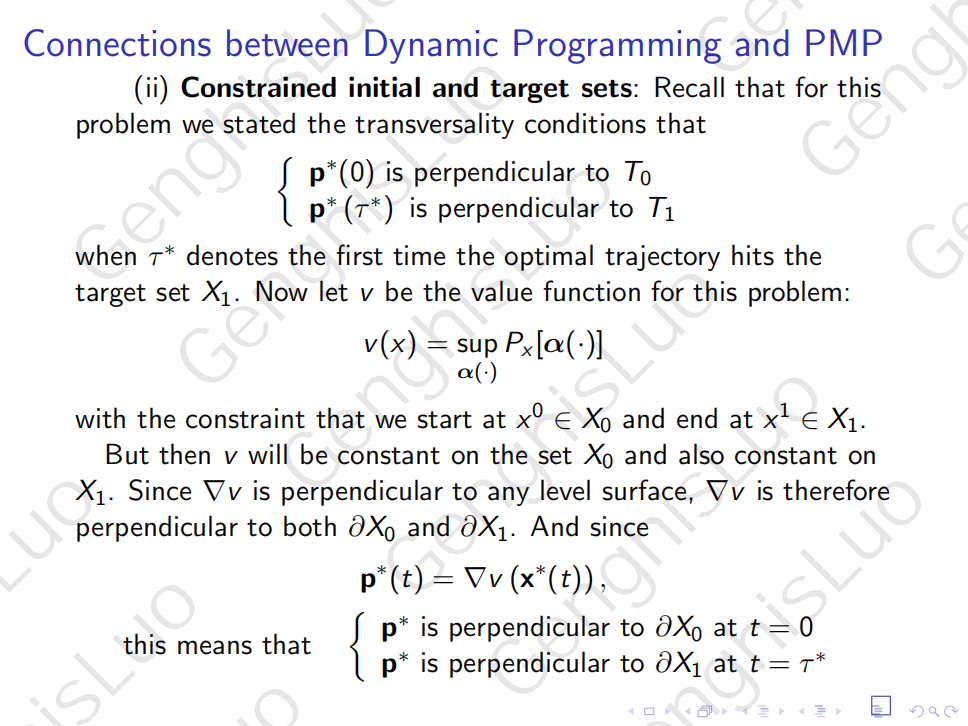

The Pontryagin Maximum Principle is one of the most important tools in Optimal Control Theory. Its motivation is to recast the whole system as a Hamiltonian system involving a costate, which reflects properties of the optimal control from a different angle and yields a great deal of new information. The idea is derived from a similar process in optimization. We give some definitions, state the Pontryagin Maximum Principle under three types of constraint, specialize it to the linear time-optimal case, and give a proof.

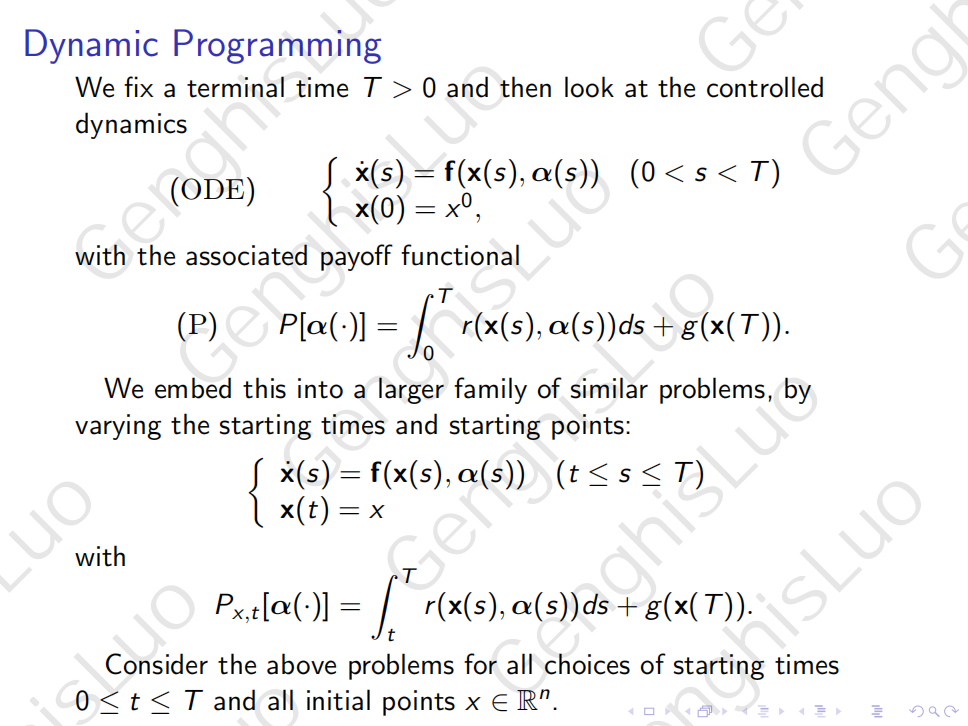

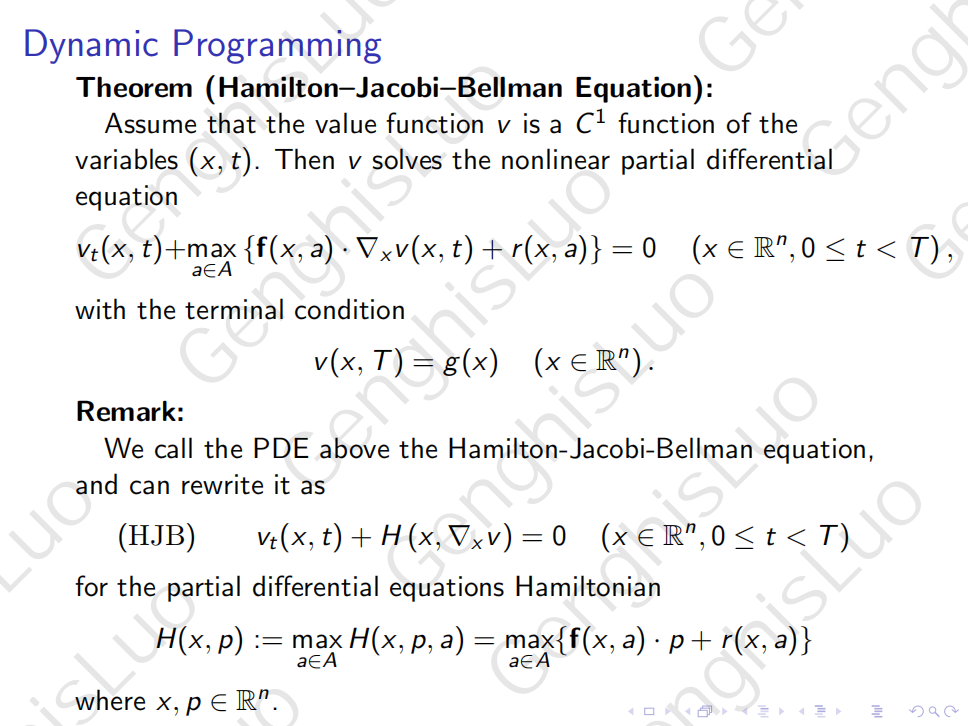

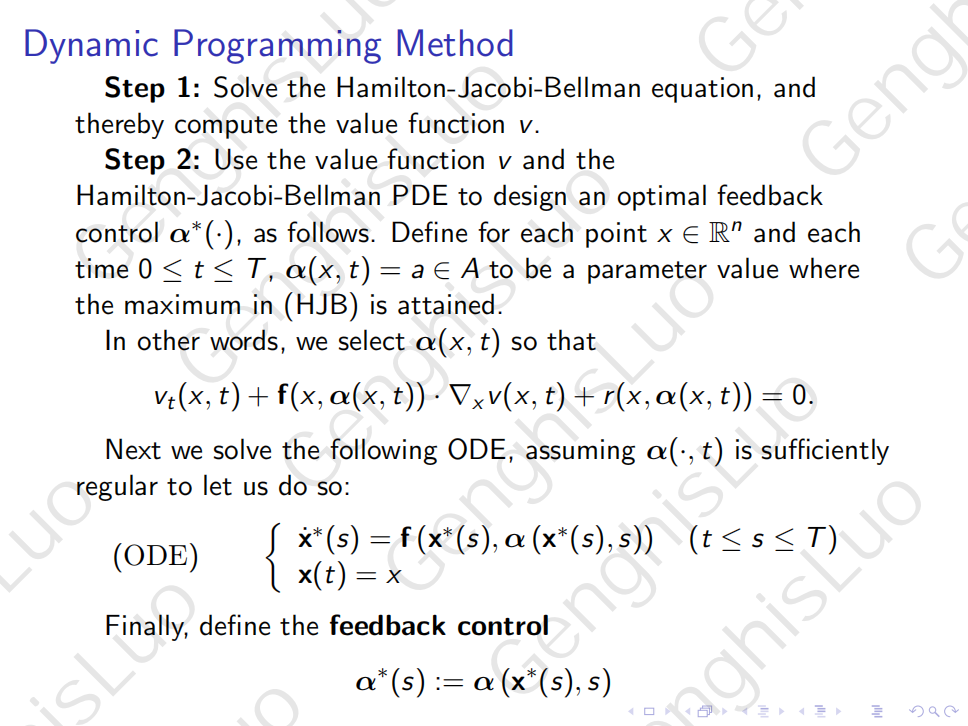

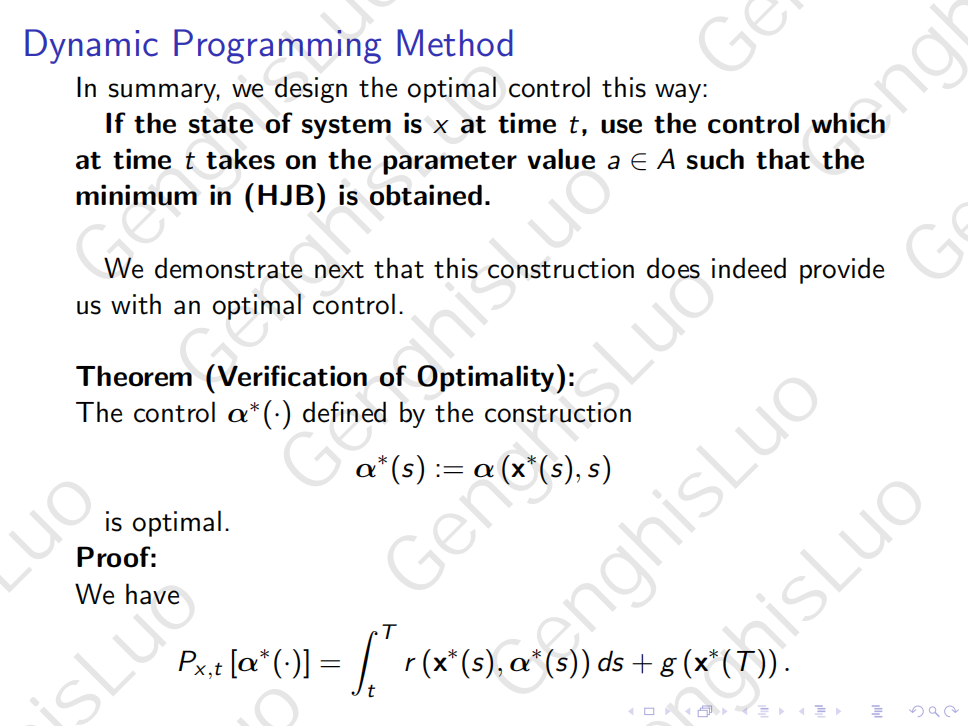

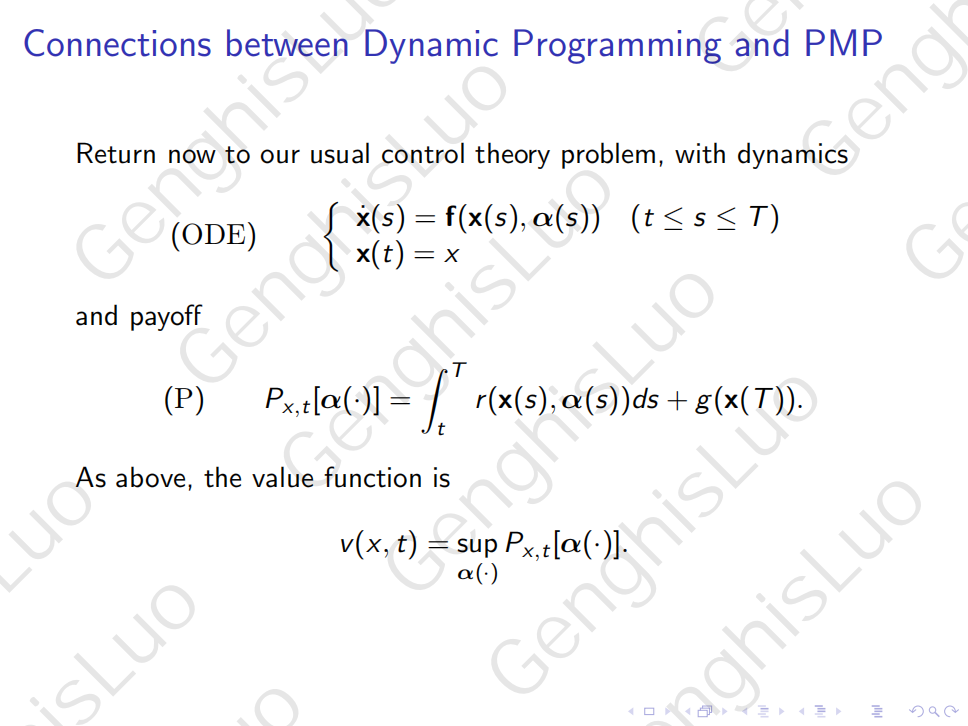
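For reference, here is a sketch of the statement in one common textbook form, for the fixed-time, free-endpoint case. The notation is assumed rather than copied from the report: dynamics x' = f(x, α) with controls valued in a set A, payoff P[α] = ∫ r dt + g(x(T)) to be maximized, and costate p. Sign and max/min conventions differ between references, so the report may use a different but equivalent form.

```latex
% Assumed notation: f (dynamics), r (running payoff), g (terminal payoff), p (costate).
\begin{align*}
  H(x, p, a) &= f(x, a) \cdot p + r(x, a)
    && \text{(control-theory Hamiltonian)} \\
  \dot{x}^*(t) &= \nabla_p H\bigl(x^*(t), p^*(t), \alpha^*(t)\bigr)
    && \text{(state equation)} \\
  \dot{p}^*(t) &= -\nabla_x H\bigl(x^*(t), p^*(t), \alpha^*(t)\bigr)
    && \text{(costate equation)} \\
  H\bigl(x^*(t), p^*(t), \alpha^*(t)\bigr) &= \max_{a \in A} H\bigl(x^*(t), p^*(t), a\bigr)
    && \text{(maximization principle)} \\
  p^*(T) &= \nabla g\bigl(x^*(T)\bigr)
    && \text{(terminal condition)}
\end{align*}
```

The first three lines show the Hamiltonian structure: the state and the costate evolve as a coupled system, and the optimal control is singled out pointwise in time by maximizing H.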

Dynamic Programming

Dynamic Programming is another very important tool in optimal control theory. Its motivation is that, in mathematics, it is sometimes easier to solve a problem by embedding it within a larger class of problems and then solving the whole class at once. For the payoff functional in particular, we vary the state and the time and take the supremum over controls to form a value function, and then derive the Hamilton-Jacobi-Bellman equation that the value function satisfies. Solving for the value function first gives the optimal value of the payoff functional for each state and time, from which we can then reconstruct the optimal feedback control. Intuitively, this is the reverse of the process of solving an optimal control problem with the Pontryagin Maximum Principle. We begin with the definition of the value function, then derive the Hamilton-Jacobi-Bellman equation, followed by a proof and a verification. Finally, we state and prove a theorem that illustrates the connection between Dynamic Programming and the Pontryagin Maximum Principle, and present a paradigm for applying the Dynamic Programming method to solve optimal control problems.

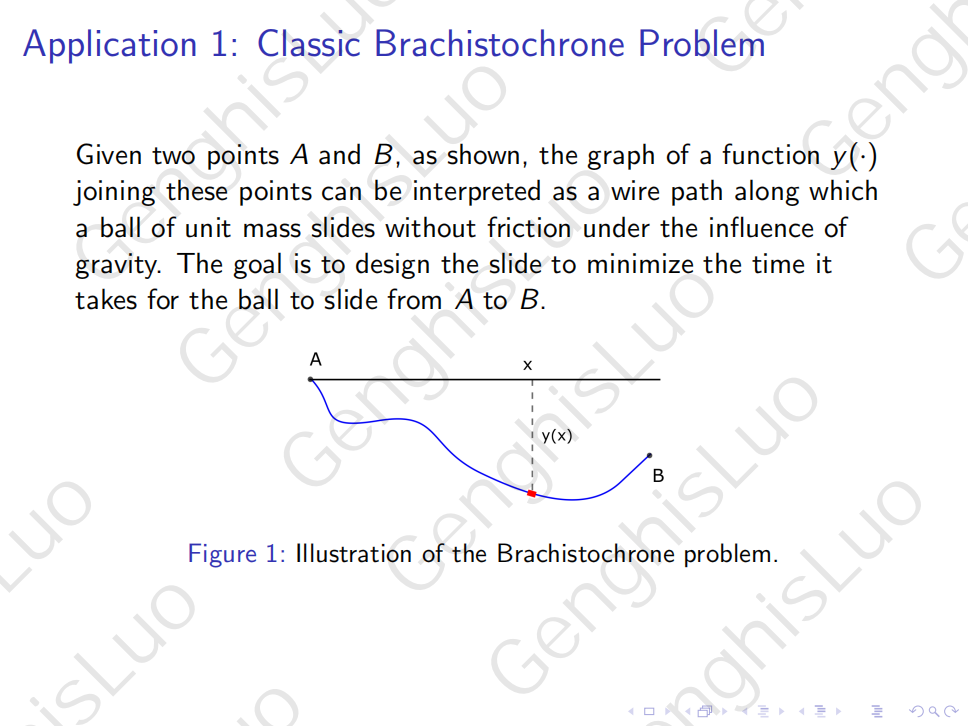

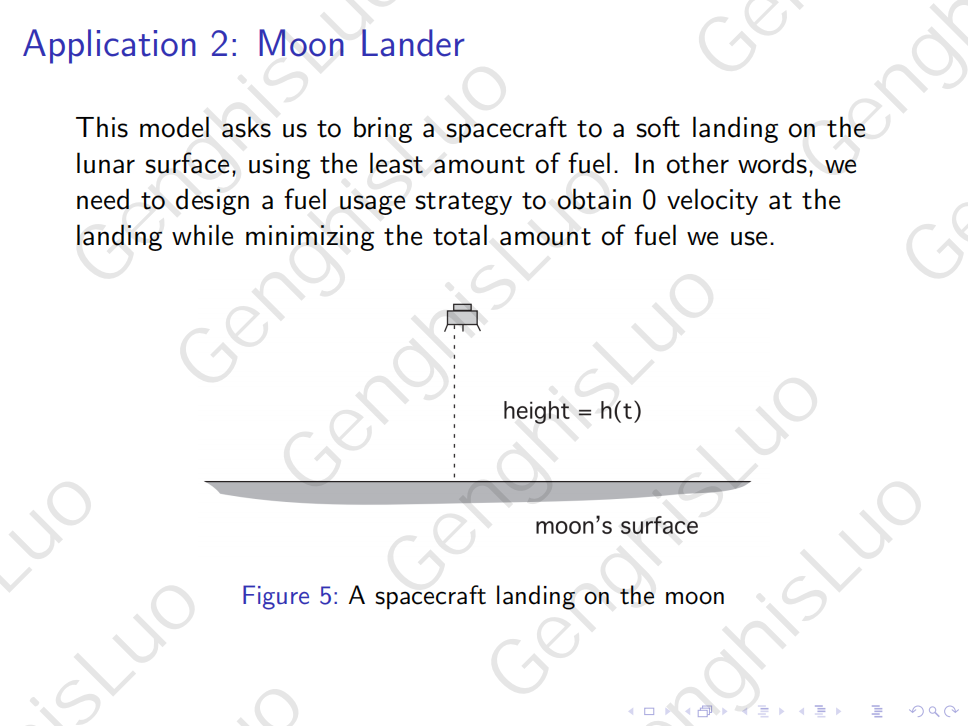

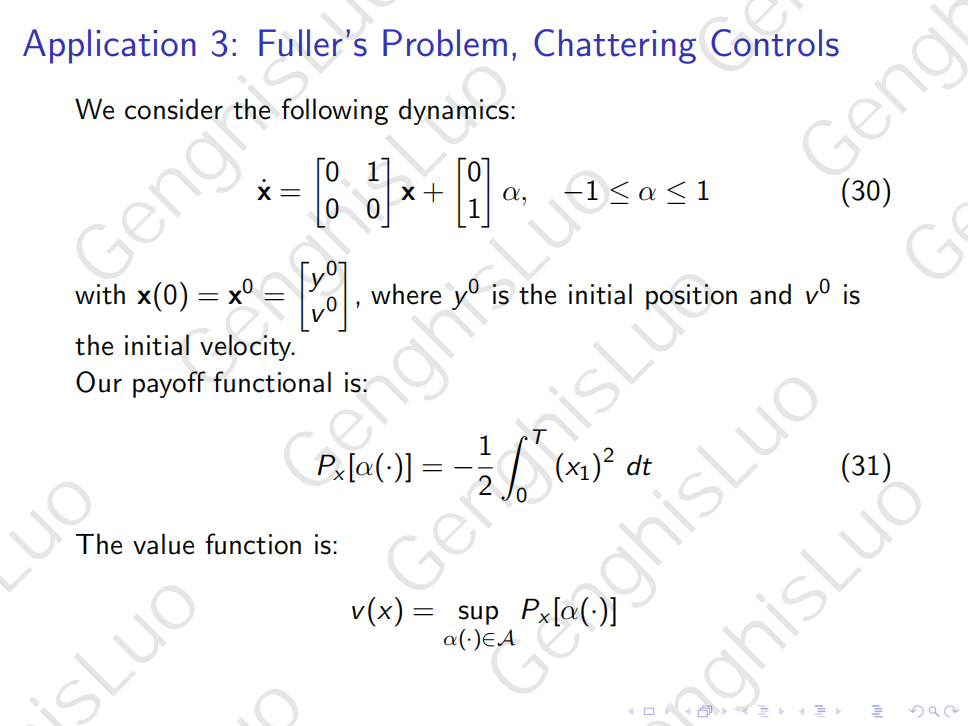
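The paradigm described above (compute the value function for every state and time, then read off a feedback control) can be sketched in a discrete-time, discrete-state analogue, where the Hamilton-Jacobi-Bellman equation becomes a backward recursion. The toy problem, the integer grid, and all names below are illustrative assumptions, not one of the models from the report.

```python
def solve_by_backward_induction(states, controls, step, running, terminal, horizon):
    """Return (value, policy) where value[t][x] is the best payoff from x at time t."""
    value = [dict() for _ in range(horizon + 1)]
    policy = [dict() for _ in range(horizon)]
    for x in states:
        value[horizon][x] = terminal(x)
    # Bellman recursion, swept backward in time.
    for t in range(horizon - 1, -1, -1):
        for x in states:
            best_a, best_v = None, float("-inf")
            for a in controls:
                nxt = step(x, a)
                if nxt not in value[t + 1]:
                    continue  # this control would leave the grid
                candidate = running(x, a) + value[t + 1][nxt]
                if candidate > best_v:
                    best_a, best_v = a, candidate
            value[t][x], policy[t][x] = best_v, best_a
    return value, policy


def rollout(x0, policy, step, running, terminal):
    """Follow the feedback policy from x0 and accumulate the payoff."""
    x, total = x0, 0
    for stage in policy:
        a = stage[x]
        total += running(x, a)
        x = step(x, a)
    return total + terminal(x)


# Toy problem (assumed): move on an integer line, penalizing distance and effort.
def step(x, a):
    return x + a


def running(x, a):
    return -(x * x + a * a)


def terminal(x):
    return -(x * x)


states = range(-5, 6)
controls = (-1, 0, 1)
horizon = 6
value, policy = solve_by_backward_induction(states, controls, step, running, terminal, horizon)
print(value[0][3], rollout(3, policy, step, running, terminal))
```

The payoff collected by following the stored feedback policy matches the value function at the starting point, which is the discrete counterpart of reconstructing the optimal feedback control from the solution of the Hamilton-Jacobi-Bellman equation.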

Applications: Brachistochrone, Moon Lander, Chattering Control

We applied the theory to analyze three distinct models: the Brachistochrone problem, the Moon Lander problem, and the Fuller Chattering Control problem. Together, we constructed the dynamical systems and employed optimization techniques such as the Hamilton-Jacobi-Bellman equation and dynamic programming. We derived the form of the analytical solutions and visualized the results to gain deeper insight into each model. This collaborative effort allowed us to explore the real-world implications and challenges of applying these mathematical methods. For more details, please contact kl4747@nyu.edu to request the full version of our academic report.